A research article on Synecoculture and Augmented Ecosystems has been published.

Masatoshi Funabashi, senior researcher at Sony CSL, presented two research papers at the Complex Computational Ecosystems 2023 conference in Baku, Azerbaijan, and one of them received the Best Non-Student Presentation Award.

■ [Best Non-Student Presentation Award] “Vegee Brain Automata: Ultradiscretization of essential chaos transversal in neural and ecosystem dynamics” (Masatoshi Funabashi)

Get the final version of the manuscript here.

■ “Modeling ecosystem management based on the integration of image analysis and human subjective evaluation – Case studies with synecological farming” (Shuntaro Aotake, Atsuo Takanishi, Masatoshi Funabashi)

Get the final version of the manuscript here.

Both papers have been published in Springer's Lecture Notes in Computer Science.

Synecoculture is introduced in Chapter 3, “Circular Design Today: Emerging Examples,” of the book Circular Design (サーキュラーデザイン) by Daijiro Mizuno and Kazutoshi Tsuda.

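Joint research on Synecoculture with Waseda University, in which Shuntaro Aotake and Masatoshi Funabashi of Sony CSL took part, was presented at the following conference.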

The 49th Annual Conference of the Institute of Image Electronics Engineers of Japan (IIEEJ), FY2021

Media Computing Conference 2021

https://www.iieej.org/annualconf/2021nenji-top/

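Saturday, June 26

●16:00-16:45 Student Session (S7), 3 presentations, “Animals” (15 min each), Chair: Tomoaki Moriya (Tokyo Denki University)

【S7-3】 Study of a method for detecting dominant vegetation in a field from RGB images using deep learning in Synecoculture environment

〇Kanta Soya (Waseda University), Shuntaro Aotake (Waseda University / Sony Computer Science Laboratories), Hiroyuki Ogata (Waseda University / Seikei University), Jun Ohya, Takuya Ohtani, Atsuo Takanishi (Waseda University), Masatoshi Funabashi (Sony Computer Science Laboratories)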

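●16:55-17:40 Student Session (S8), 3 presentations, “Animals” (15 min each), Chair: Naoki Kobayashi (Saitama Medical University)

【S8-2】 Study of a Method for Recognizing Field Covering Situation by Applying Semantic Segmentation to RGB Images in Synecoculture Environment

〇Reina Yoshizaki (Waseda University), Shuntaro Aotake (Waseda University / Sony Computer Science Laboratories), Hiroyuki Ogata (Waseda University / Seikei University), Jun Ohya, Takuya Ohtani, Atsuo Takanishi (Waseda University), Masatoshi Funabashi (Sony Computer Science Laboratories)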

——————————

English :

The Institute of Image Electronics Engineers of Japan (IIEEJ)

Media Computing Conference 2021

6/26

Student Session (S7) “Animal Session”

●【S7-3】 Study of a method for detecting dominant vegetation in a field from RGB images using deep learning in Synecoculture environment

〇Kanta SOYA*1, Shuntaro AOTAKE*2/*3, Hiroyuki OGATA*4/*5, Jun OHYA*6, Takuya OHTANI*7, Atsuo TAKANISHI*6, Masatoshi FUNABASHI*3

*1 Dept. of Modern Mechanical Engineering, WASEDA University, *2 Dept. of Advanced Science and Engineering, WASEDA University, *3 Sony Computer Science Laboratories, Inc., *4 Waseda University, Future Robotics Organization, *5 Dept. of System Design, SEIKEI University, *6 Dept. of Modern Mechanical Engineering, WASEDA University, *7 Waseda Research Institute for Science and Engineering

Student Session (S8) “Animal Session”

●【S8-2】 Study of a Method for Recognizing Field Covering Situation by Applying Semantic Segmentation to RGB Images in Synecoculture Environment

〇Reina YOSHIZAKI*1, Shuntaro AOTAKE*2/*3, Hiroyuki OGATA*4/*5, Jun OHYA*1, Takuya OHTANI*6, Atsuo TAKANISHI*1, Masatoshi FUNABASHI*3

*1 Graduate School of Creative Science and Engineering, Waseda University, *2 Graduate School of Advanced Science and Engineering, Waseda University, *3 Sony Computer Science Laboratories, Inc., *4 Faculty of Science and Technology, Seikei University, *5 Waseda University, Future Robotics Organization, *6 Waseda Research Institute for Science and Engineering

The following article was published in the MDPI journal Agriculture, in the Special Issue Secondary Metabolites in Plant-Microbe Interactions:

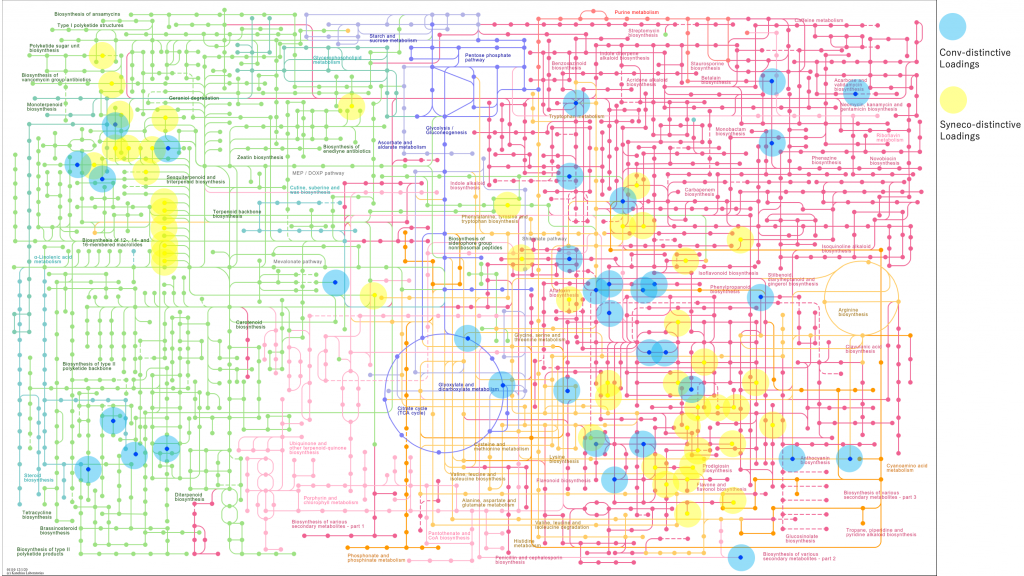

Ohta, K.; Kawaoka, T.; Funabashi, M. Secondary Metabolite Differences between Naturally Grown and Conventional Coarse Green Tea. Agriculture 2020, 10, 632.

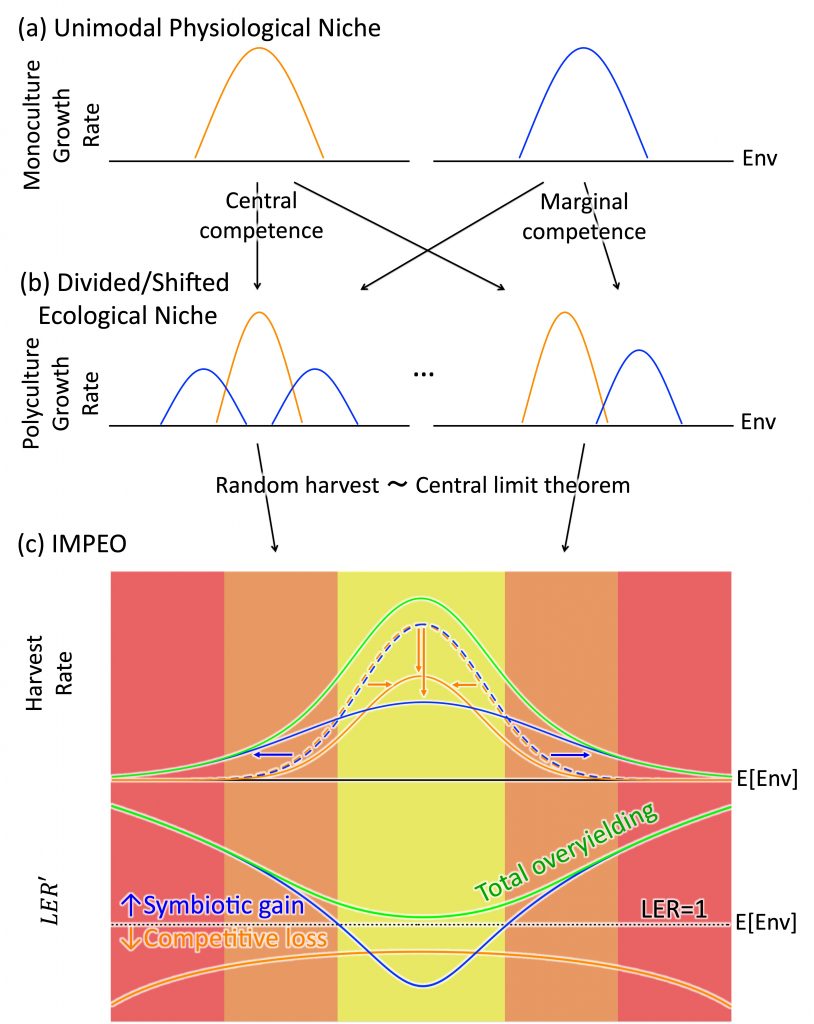

The following preprint was published: “Power-law productivity and positive regime shift of symbiotic and climate-resilient edible ecosystems” by Masatoshi Funabashi.

A book arising from a Cerisy colloquium (2017) has been published by Éditions Hermann, to which Masatoshi Funabashi contributed the chapter “La gestion agro-environnementale: Les atouts de la synécoculture.”